NYU Hand Pose Dataset

Overview

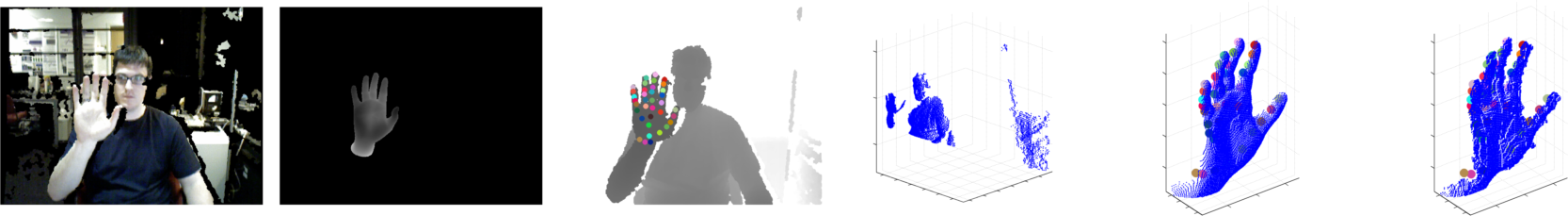

The NYU Hand Pose Dataset contains 8252 test-set and 72757 training-set frames of captured RGBD data with ground-truth hand-pose information. For each frame, the RGBD data from 3 Kinects is provided: a frontal view and 2 side views. The training set contains samples from a single user only (Jonathan Tompson), while the test set contains samples from two users (Murphy Stein and Jonathan Tompson). A synthetic re-creation (rendering) of the hand pose is also provided for each view.

We also provide the predicted joint locations from our ConvNet (for the test-set) so you can compare performance. Note: for real-time prediction we used only the depth image from Kinect 1.

The source code to fit the hand model to the depth frames can be found here

NEW: The dataset used to train the RDF is also public! It contains 6736 depth frames of myself doing various hand gestures (seated and standing) and the ground-truth per-pixel labels (hand/not hand).

Citing the dataset

@article{tompson14tog,
  author = {Jonathan Tompson and Murphy Stein and Yann LeCun and Ken Perlin},
  title = {Real-Time Continuous Pose Recovery of Human Hands Using Convolutional Networks},
  journal = {ACM Transactions on Graphics},
  year = {2014},
  month = {August},
  volume = {33}
}

Publication

| TOG'14 paper | SIGGRAPH'14 ppt |

Download

You can download the dataset here:

nyu_hand_dataset_v2.zip (92 GB)

Dataset format

The top level directory is structured as follows:

- visualize_example.m - Example script: loading and displaying one data sample
- evaluate_predictions.m - Example script: displaying our detector's predicted coordinates and performance
- test/
  - depth_<k>_<f>.png - Test-set depth frame <f> for Kinect <k>.
  - synthdepth_<k>_<f>.png - Test-set synthetic depth frame <f> for Kinect <k>.
  - rgb_<k>_<f>.png - Test-set RGB frame <f> for Kinect <k>.
  - joint_data.mat - Matlab data containing:
    - joint_names - Cell of strings containing the names of the 36 key hand locations
    - joint_uvd - 4D tensor containing the UVD location of each joint in the test-set frames
    - joint_xyz - 4D tensor containing the XYZ location of each joint in the test-set frames
  - test_predictions.mat - Matlab data containing:
    - conv_joint_names - Cell of strings containing the names of the locations tracked by the ConvNet
    - pred_joint_uvconf - UV and confidence (likelihood) for each tracked joint
- train/
  - depth_<k>_<f>.png - Training-set depth frame <f> for Kinect <k>.
  - synthdepth_<k>_<f>.png - Training-set synthetic depth frame <f> for Kinect <k>.
  - rgb_<k>_<f>.png - Training-set RGB frame <f> for Kinect <k>.
  - joint_data.mat - Matlab data containing:
    - joint_names - Cell of strings containing the names of the 36 key hand locations
    - joint_uvd - 4D tensor containing the UVD location of each joint in the training-set frames
    - joint_xyz - 4D tensor containing the XYZ location of each joint in the training-set frames
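As a rough illustration of the naming scheme above, the following Python sketch builds the three image paths for one frame across all Kinects. The helper name `frame_paths`, the 1-based Kinect indices, and the zero-padding width of the frame number are assumptions that this page does not specify; check them against the extracted files before relying on them.

```python
from pathlib import Path

# Assumption: the three Kinects are numbered 1, 2, 3.
KINECTS = (1, 2, 3)


def frame_paths(root, split, frame, pad=7):
    """Return {kind: {kinect: Path}} for a single frame.

    root  -- directory that contains the 'test' and 'train' folders
    split -- 'test' or 'train'
    frame -- integer frame index <f>
    pad   -- ASSUMED zero-padding width of <f>; verify on the real files
    """
    base = Path(root) / split
    return {
        kind: {k: base / f"{kind}_{k}_{frame:0{pad}d}.png" for k in KINECTS}
        for kind in ("depth", "synthdepth", "rgb")
    }


# Hypothetical dataset location, used only for illustration.
paths = frame_paths("nyu_hand_dataset_v2", "test", 1)
print(paths["depth"][1])
```

Passing a different `pad` value (or `pad=1` for no padding) adapts the helper if the files on disk follow another convention.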

Note: In each depth png file the top 8 bits of depth are packed into the green channel and the lower 8 bits into blue.
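The packing described in the note can be undone with a shift and an OR. The sketch below is a minimal, library-free Python version that works on plain (red, green, blue) tuples; the function names are hypothetical, and in practice you would read the pixels with an image library of your choice and vectorize the same arithmetic.

```python
def decode_depth_pixel(green, blue):
    """Rebuild one 16-bit depth value: green holds the top 8 bits, blue the lower 8."""
    return (green << 8) | blue


def decode_depth_image(rgb_rows):
    """Decode an image given as rows of (red, green, blue) integer tuples.

    The red channel is ignored here, since the note only assigns
    depth bits to the green and blue channels.
    """
    return [[decode_depth_pixel(g, b) for (_r, g, b) in row] for row in rgb_rows]


# Two made-up pixels: (green=2, blue=88) and (green=0, blue=255).
example = [[(0, 2, 88), (0, 0, 255)]]
print(decode_depth_image(example))  # [[600, 255]]
```

When using an array library, cast the 8-bit channels to a wider integer type before shifting, otherwise the top bits can be lost to overflow.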

*NEW*: RDF Download

You can download the dataset used to train the RDF here: